StylizedGS: Controllable Stylization for 3D Gaussian Splatting

A 3D neural style transfer framework with adaptable control, built on the 3D Gaussian Splatting representation

StylizedGS introduces a controllable stylization method for real-world scenes represented by 3D Gaussians. It transfers the style features of a reference image to the entire 3D scene, producing visually coherent novel views in the new style. Because it builds on 3D Gaussian Splatting, stylized views can be synthesized at fast inference speed. The method optimizes both the geometry and the color of the 3D Gaussians so that rendered images faithfully reflect the style while preserving the scene's semantic content.

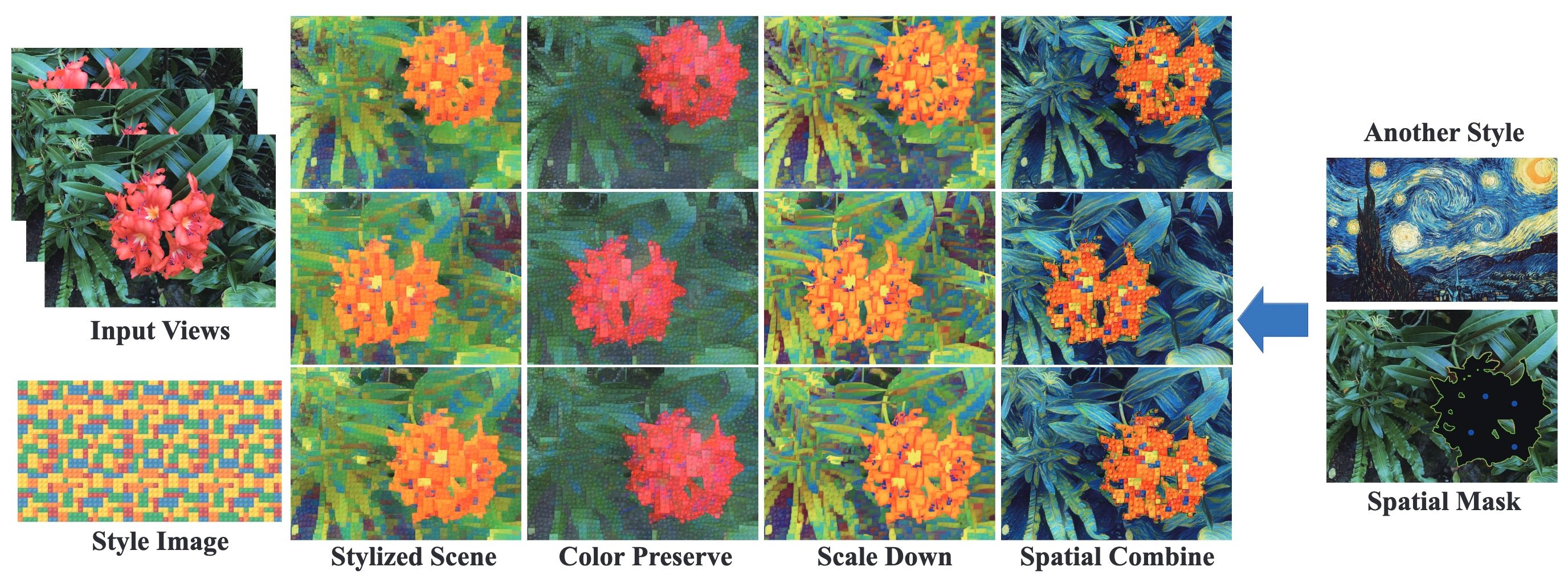

To achieve detailed style transfer, the method learns optimal geometry and color parameters for the 3D Gaussians. It follows a two-step stylization framework: the first step matches the scene's colors to the style image and reduces artifacts and noise, and the second step performs the stylization itself by optimizing a nearest-neighbor feature matching loss. A depth preservation loss keeps the stylized scene close to the originally learned 3D geometry. Together, these loss functions and optimization schemes support flexible perceptual control, letting users adjust color, scale, and spatial regions during stylization.
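
The losses named above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: all function names are hypothetical, features are assumed to be flattened per-pixel vectors from a pretrained network such as VGG, the color match is shown as a mean-and-covariance linear color transfer (one common choice, assumed here), and the nearest-neighbor feature match follows the cosine-distance formulation popularized by ARF (Artistic Radiance Fields).

```python
import numpy as np


def _sym_sqrt(m, inverse=False, eps=1e-5):
    """Square root (or inverse square root) of a symmetric PSD matrix via eigh."""
    w, v = np.linalg.eigh(m)
    w = np.clip(w, eps, None)            # guard against tiny/negative eigenvalues
    d = w ** (-0.5 if inverse else 0.5)
    return (v * d) @ v.T                 # V diag(d) V^T


def color_match(content, style):
    """Assumed step-1 color match: map content pixels (N, 3) so their mean and
    covariance equal those of the style pixels (M, 3). Caller may clip to [0, 1]."""
    mu_c, mu_s = content.mean(0), style.mean(0)
    cov_c = np.cov(content, rowvar=False)
    cov_s = np.cov(style, rowvar=False)
    a = _sym_sqrt(cov_s) @ _sym_sqrt(cov_c, inverse=True)   # A cov_c A^T = cov_s
    return (content - mu_c) @ a.T + mu_s


def nnfm_loss(render_feats, style_feats, eps=1e-8):
    """Nearest-neighbor feature matching: for every rendered feature (N, C), take the
    cosine distance to its closest style feature (M, C), then average."""
    r = render_feats / (np.linalg.norm(render_feats, axis=1, keepdims=True) + eps)
    s = style_feats / (np.linalg.norm(style_feats, axis=1, keepdims=True) + eps)
    cos_dist = 1.0 - r @ s.T             # (N, M) pairwise cosine distances
    return cos_dist.min(axis=1).mean()


def depth_preservation_loss(depth_stylized, depth_original):
    """Assumed form: mean squared error between depth rendered from the stylized
    Gaussians and depth rendered from the original reconstruction."""
    return np.mean((depth_stylized - depth_original) ** 2)
```

In an actual training loop these terms would be computed on rendered views with an autodiff framework and combined with weights chosen by the user; NumPy is used here only to keep the sketch self-contained.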

Perceptual control in StylizedGS covers several factors. With color control, users can retain the scene's original hues or apply a color scheme taken from a different style image. Spatial control lets users assign different styles to specified regions of the scene, increasing customization. Sequential control combines these, so that color, scale, and spatial adjustments can be applied one after another for precise results. Ablation studies confirm that color and density fine-tuning, depth preservation, and the 3D Gaussian filter each contribute to high-quality stylization.
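
The color and spatial controls described above can be illustrated with two small building blocks. This sketch assumes the classic luminance-only color preservation of Gatys et al. (keep the stylized YIQ luminance, restore the original chrominance) and a per-region nearest-neighbor feature match driven by user-supplied masks; the paper's exact formulation may differ, and all function names are hypothetical.

```python
import numpy as np

# Standard RGB -> YIQ matrix; Y is luminance, I and Q carry chrominance (hue).
RGB_TO_YIQ = np.array([[0.299, 0.587, 0.114],
                       [0.596, -0.274, -0.322],
                       [0.211, -0.523, 0.312]])
YIQ_TO_RGB = np.linalg.inv(RGB_TO_YIQ)


def preserve_original_hues(stylized, original):
    """Assumed color control: keep the stylized luminance but restore the original
    chrominance. Inputs are (..., 3) RGB arrays; output may need clipping to [0, 1]."""
    yiq_s = stylized @ RGB_TO_YIQ.T
    yiq_o = original @ RGB_TO_YIQ.T
    mixed = np.concatenate([yiq_s[..., :1], yiq_o[..., 1:]], axis=-1)
    return mixed @ YIQ_TO_RGB.T


def _nnfm(render, style, eps=1e-8):
    """Mean cosine distance from each rendered feature to its nearest style feature."""
    r = render / (np.linalg.norm(render, axis=1, keepdims=True) + eps)
    s = style / (np.linalg.norm(style, axis=1, keepdims=True) + eps)
    return (1.0 - r @ s.T).min(axis=1).mean()


def spatial_nnfm_loss(render_feats, region_masks, region_style_feats):
    """Assumed spatial control: features inside each boolean region mask (N,) are
    matched only against that region's style features; results are averaged,
    weighted by region size."""
    total, count = 0.0, 0
    for mask, style in zip(region_masks, region_style_feats):
        n = int(mask.sum())
        if n == 0:
            continue                     # skip regions with no pixels in this view
        total += _nnfm(render_feats[mask], style) * n
        count += n
    return total / max(count, 1)
```

Weighting each region by its pixel count keeps small regions from dominating the loss; a per-region weight would be an equally reasonable design choice.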
