Arc2Face: A Foundation Model of Human Faces

Generates diverse photo-realistic images with high face similarity

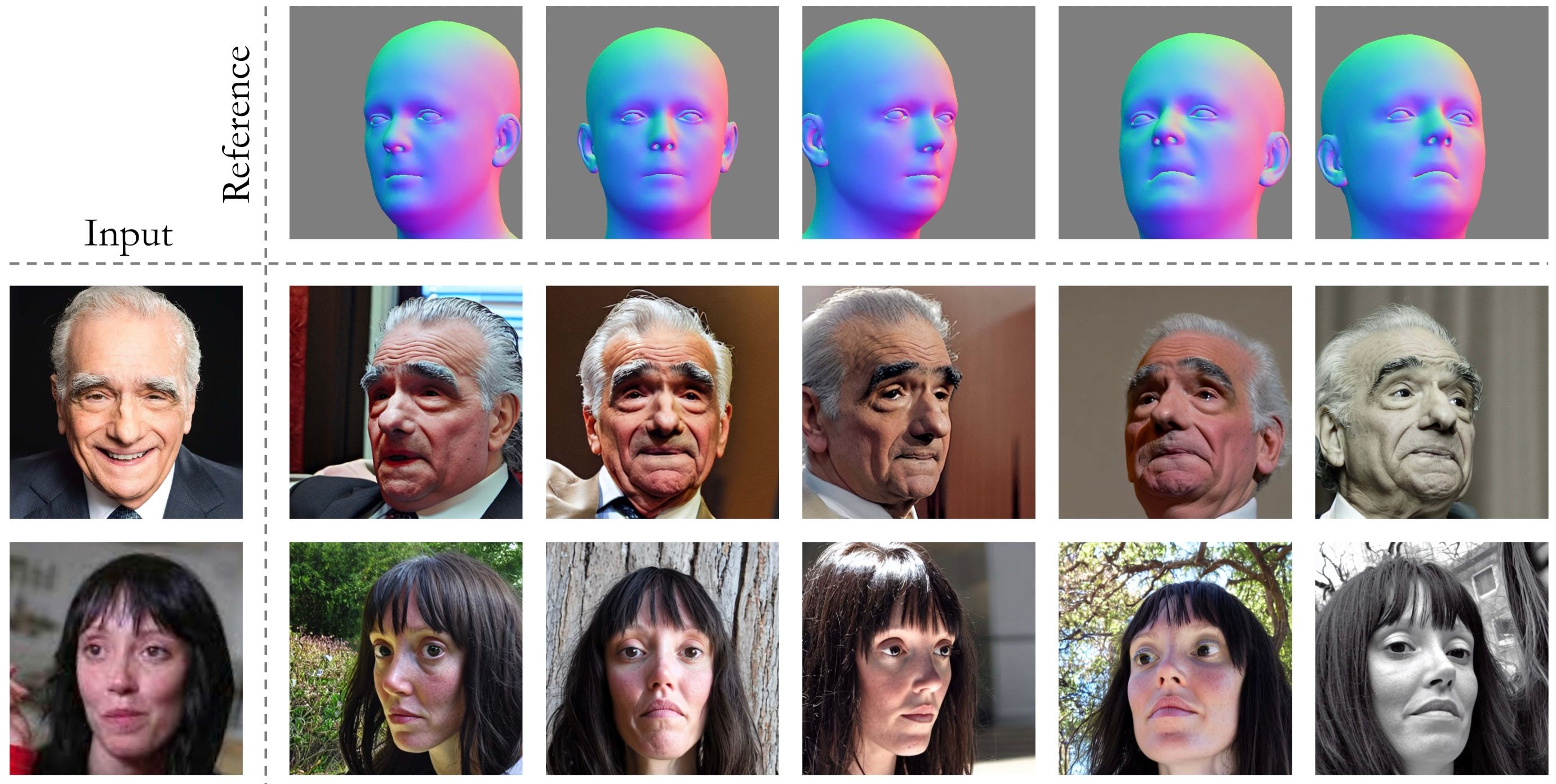

Previous approaches were limited by the small number of identities available in high-resolution datasets. Arc2Face addresses this limitation by meticulously upsampling a significant portion of the WebFace42M database, the largest public dataset for face recognition (FR). By conditioning the pre-trained Stable Diffusion model on ID features, Arc2Face generates images from ArcFace embeddings alone, with no need for additional text prompts.

The method passes the ArcFace embedding through the text encoder inside a frozen pseudo-prompt, which keeps the input compatible with the encoder, and projects it into the CLIP latent space used for cross-attention control. Extensive fine-tuning effectively turns the text encoder into a face encoder specialized for projecting ArcFace embeddings.
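To make the pseudo-prompt idea concrete, here is a minimal sketch of how an ID embedding could be slotted into a fixed prompt before it reaches the text encoder. The prompt wording, the `<id>` placeholder, the 512 and 768 dimensions, the zero-padding step, and the random stand-in vectors are all assumptions made for illustration; none of them is taken from the text above, and the encoder and cross-attention stages are left out.

```python
import random

# Assumed sizes: ArcFace embedding width and CLIP token-embedding width.
ID_DIM = 512
TOKEN_DIM = 768

# Hypothetical frozen pseudo-prompt; "<id>" marks the slot for the face embedding.
PROMPT = ["photo", "of", "a", "<id>", "person"]


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = sum(x * x for x in vec) ** 0.5
    return [x / norm for x in vec]


def zero_pad(vec, dim):
    """Right-pad a vector with zeros up to the requested width."""
    return list(vec) + [0.0] * (dim - len(vec))


def build_encoder_input(id_embedding, token_table):
    """Return the token-embedding sequence with the ID vector in the <id> slot."""
    id_token = zero_pad(l2_normalize(id_embedding), TOKEN_DIM)
    return [id_token if tok == "<id>" else token_table[tok] for tok in PROMPT]


# Random stand-ins for the frozen word embeddings and for an ArcFace embedding.
rng = random.Random(0)
token_table = {
    tok: [rng.gauss(0.0, 1.0) for _ in range(TOKEN_DIM)]
    for tok in PROMPT
    if tok != "<id>"
}
id_embedding = [rng.gauss(0.0, 1.0) for _ in range(ID_DIM)]

sequence = build_encoder_input(id_embedding, token_table)
print(len(sequence), len(sequence[0]))
```

In this sketch only the `<id>` slot varies between identities; the other token embeddings stay fixed, which is what "frozen" is taken to mean here. The fine-tuned encoder would then map this sequence to the vectors consumed by cross-attention.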

The resulting model focuses exclusively on ID embeddings, disregarding its initial language guidance. This streamlined approach ensures robustness and efficiency, enabling Arc2Face to achieve superior identity consistency and produce high-quality facial images within seconds. Additionally, the model can be extended with further conditioning inputs, such as pose and expression, which broadens its applicability to tasks that require ID consistency.
