StyleGaussian: Instant 3D Style Transfer with Gaussian Splatting

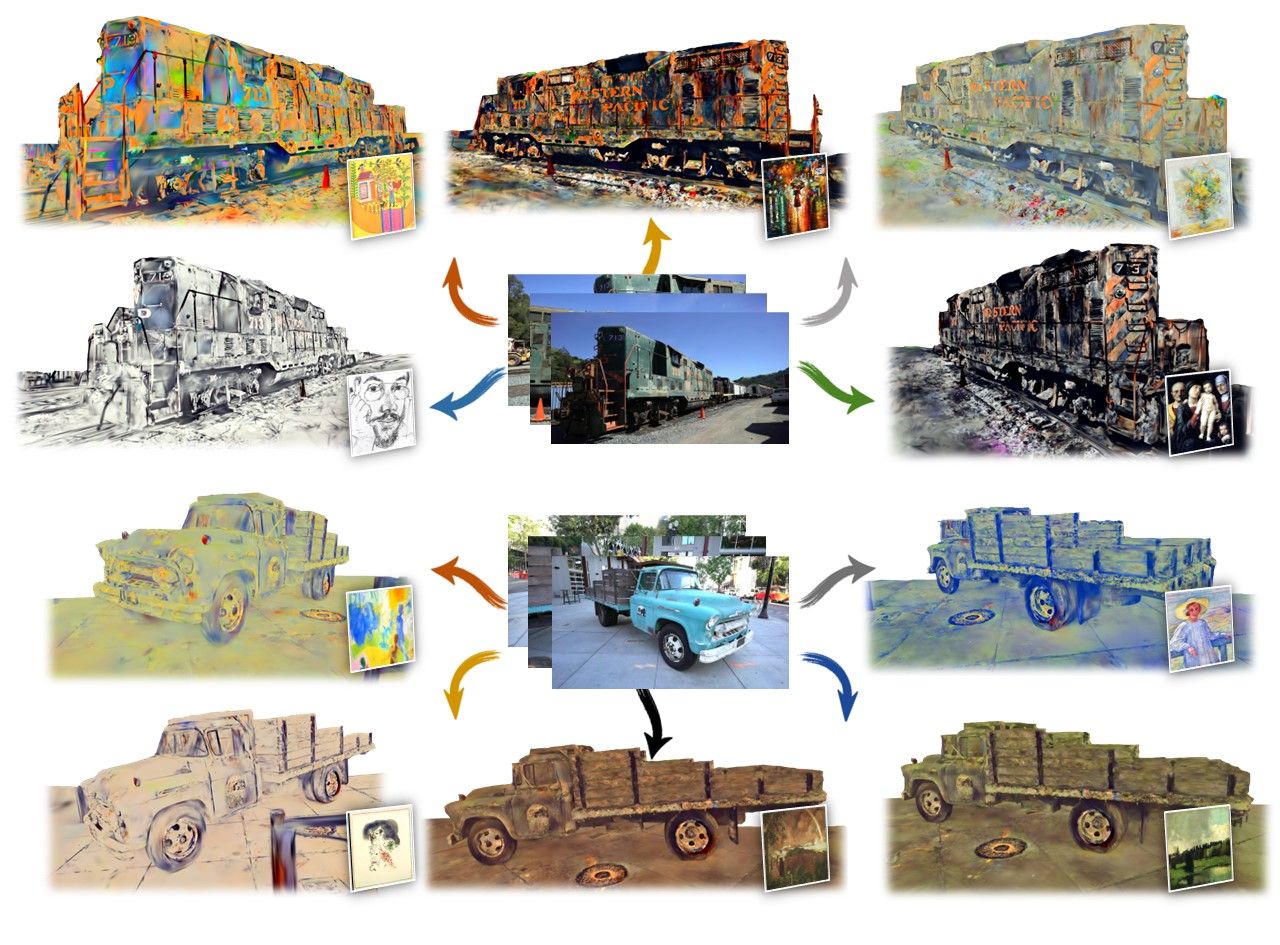

A 3D style transfer technique that instantly transfers the style of any image to a 3D scene at 10 fps, using 3D Gaussian Splatting and novel feature rendering strategies.

The paper introduces StyleGaussian, a novel 3D style transfer pipeline that performs instant style transfer while preserving real-time rendering and strict multi-view consistency. The pipeline has three steps: embedding, transfer, and decoding. In the embedding step, VGG features are embedded into the reconstructed 3D Gaussians for stylization, and an efficient feature rendering strategy makes it possible to render high-dimensional features while learning to embed them into the Gaussians. In the transfer step, stylization is achieved by aligning the channel-wise mean and variance of the embedded features with those of the style image's VGG features, as in AdaIN. Finally, the decoding step uses a KNN-based 3D CNN to decode the stylized features of the 3D Gaussians to RGB without impairing multi-view consistency.
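The transfer step can be illustrated with a minimal NumPy sketch of AdaIN applied to per-Gaussian features. It assumes the embedded features are stored as an (N, C) array with one row per Gaussian and the style image's VGG features are flattened to an (M, C) array; the function names `adain_gaussian_features` and `interpolate_styles` are hypothetical. Linearly blending two stylized feature sets is shown as one plausible way to interpolate between styles, not necessarily the paper's exact procedure.

```python
import numpy as np


def adain_gaussian_features(content_feats, style_feats, eps=1e-5):
    """AdaIN on 3D Gaussians: match channel-wise mean/std of content to style.

    content_feats: (N, C) features embedded in N Gaussians (one row per Gaussian).
    style_feats:   (M, C) VGG features of the style image, flattened over pixels.
    """
    c_mean = content_feats.mean(axis=0, keepdims=True)
    c_std = np.sqrt(content_feats.var(axis=0, keepdims=True) + eps)
    s_mean = style_feats.mean(axis=0, keepdims=True)
    s_std = np.sqrt(style_feats.var(axis=0, keepdims=True) + eps)
    # Normalise each channel, then re-scale and re-shift it with the style statistics.
    return (content_feats - c_mean) / c_std * s_std + s_mean


def interpolate_styles(content_feats, style_a, style_b, t):
    """Hypothetical style interpolation: blend two AdaIN outputs with weight t in [0, 1]."""
    feats_a = adain_gaussian_features(content_feats, style_a)
    feats_b = adain_gaussian_features(content_feats, style_b)
    return (1.0 - t) * feats_a + t * feats_b


rng = np.random.default_rng(0)
content = rng.normal(size=(1000, 256))  # placeholder embedded Gaussian features
style = rng.normal(loc=2.0, scale=3.0, size=(4096, 256))  # placeholder style features
stylized = adain_gaussian_features(content, style)
print(np.allclose(stylized.mean(axis=0), style.mean(axis=0)))  # True
```

Because the statistics are computed over Gaussians rather than over a rendered image, the stylized features stay attached to the 3D scene, so every view is rendered from the same stylized representation.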

The feature embedding process assigns each Gaussian a learnable feature parameter and renders the feature of each pixel in the same way as RGB is rendered, by alpha-blending the features of the Gaussians that cover the pixel. The rendered features are optimized with an L1 loss against the ground-truth VGG features of the training views. Because rendering high-dimensional VGG features directly is expensive, the efficient feature rendering strategy first renders low-dimensional features and then maps them to high-dimensional ones; applying the same mapping to each Gaussian's low-dimensional feature then yields that Gaussian's high-dimensional feature.
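The following sketch illustrates why rendering low-dimensional features first is sufficient. It alpha-blends per-Gaussian features along a single pixel's ray exactly as RGB would be blended, then compares lifting the rendered feature to high dimension against lifting every Gaussian's feature first. It assumes the low-to-high mapping is a bias-free linear map `T` (with a bias term, the two orders agree only when the blending weights sum to one); the sizes, opacities, and the placeholder VGG target are made up for illustration.

```python
import numpy as np


def composite_weights(alphas):
    """Front-to-back blending weights w_i = a_i * prod_{j<i} (1 - a_j) for depth-sorted Gaussians."""
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    return alphas * transmittance


def render_pixel_feature(alphas, feats):
    """Blend per-Gaussian features (K, D) along one ray, the same way RGB is blended."""
    return composite_weights(alphas) @ feats


rng = np.random.default_rng(0)
K, D_LOW, D_HIGH = 16, 32, 256  # hypothetical sizes
alphas = rng.uniform(0.05, 0.6, size=K)  # effective opacities of the K Gaussians hit by one pixel
low_feats = rng.normal(size=(K, D_LOW))  # learnable low-dimensional features
T = rng.normal(size=(D_LOW, D_HIGH)) / np.sqrt(D_LOW)  # hypothetical learned linear map

# Efficient order: render the low-dimensional feature, then lift it.
lifted = render_pixel_feature(alphas, low_feats) @ T
# Expensive order: lift every Gaussian's feature, then render D_HIGH channels.
direct = render_pixel_feature(alphas, low_feats @ T)
print(np.allclose(lifted, direct))  # True, because blending is linear in the features

# L1 embedding loss against a placeholder for the ground-truth VGG feature of this pixel.
vgg_target = rng.normal(size=D_HIGH)
l1_loss = np.abs(lifted - vgg_target).mean()
```

Since blending is linear in the features, both orders give the same pixel feature. The rasterizer therefore only has to carry D_LOW channels, and each Gaussian's high-dimensional feature is its low-dimensional feature multiplied by `T`.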

The decoding step is designed to preserve strict multi-view consistency. It uses a 3D CNN that treats the K nearest neighbors of each Gaussian as its sliding window and formulates the convolution operation to match this window. This design allows zero-shot 3D style transfer: no optimization is required for new style images.

The paper also shows that StyleGaussian handles a wide range of style exemplars and achieves superior style transfer quality, with better style alignment and content preservation than other zero-shot radiance field style transfer methods. The method further supports smooth interpolation between different styles at inference time, and the paper proposes a decoder initialization process to reduce the time-consuming per-scene training requirement.
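The KNN-based decoder can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: neighbours are found by brute force, each Gaussian's K nearest neighbours (itself included) are sorted by distance, and the k-th neighbour in that order is assumed to get its own weight matrix, so one layer has weights of shape (K, C_in, C_out). The function names, layer widths, and the ReLU/sigmoid choices are hypothetical.

```python
import numpy as np


def knn_indices(positions, k):
    """Indices of the k nearest Gaussians for each Gaussian, sorted by distance (self first)."""
    d2 = ((positions[:, None, :] - positions[None, :, :]) ** 2).sum(axis=-1)
    return np.argsort(d2, axis=1, kind="stable")[:, :k]


def knn_conv(positions, feats, weight, bias):
    """One KNN 'convolution' layer: the k-th nearest neighbour is multiplied by weight[k].

    feats: (N, C_in), weight: (K, C_in, C_out), bias: (C_out,) -> returns (N, C_out)
    """
    k = weight.shape[0]
    nbr = knn_indices(positions, k)  # (N, K) sliding window per Gaussian
    gathered = feats[nbr]  # (N, K, C_in) neighbour features
    return np.einsum("nkc,kco->no", gathered, weight) + bias


rng = np.random.default_rng(0)
N, K, C = 200, 8, 32  # hypothetical scene size, window size, feature width
pos = rng.uniform(size=(N, 3))  # Gaussian centres
stylized = rng.normal(size=(N, C))  # placeholder stylized features
w1, b1 = rng.normal(size=(K, C, 16)) * 0.1, np.zeros(16)
w2, b2 = rng.normal(size=(K, 16, 3)) * 0.1, np.zeros(3)

hidden = np.maximum(knn_conv(pos, stylized, w1, b1), 0.0)  # ReLU
rgb = 1.0 / (1.0 + np.exp(-knn_conv(pos, hidden, w2, b2)))  # one colour per Gaussian
```

Because the decoder outputs one colour per Gaussian instead of operating on rendered 2D images, every camera view rasterizes the same stylized colours, which is what keeps the result strictly multi-view consistent.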
