V-IRL: Grounding Virtual Intelligence in Real Life

Scalable platform powering AI agents that interact with a virtual representation of the real world, bridging the gap between text-centric AI environments and real-world sensory experiences.

In the ever-evolving landscape of artificial intelligence, V-IRL aims to revolutionize how AI agents interact with and understand the world around them. At its core, V-IRL seeks to bridge the chasm between the text-centric digital realms of current AI systems and the rich sensory experiences that define human existence.

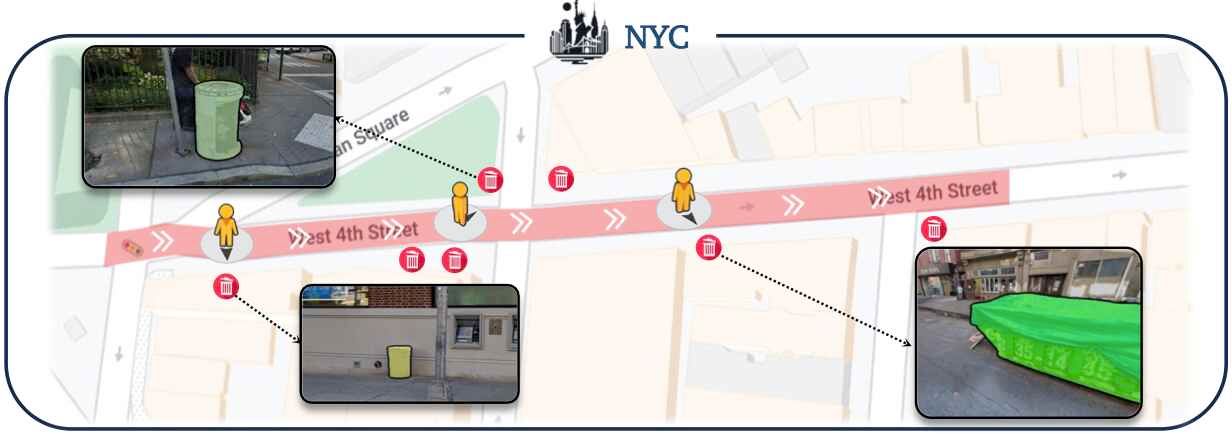

Imagine AI agents navigating through virtual replicas of real cities, seamlessly integrating geographic coordinates and street view imagery to ground themselves in their surroundings. This is precisely what V-IRL enables—a scalable platform that brings AI agents into the virtual embodiment of our physical world.

While the concept of embodying AI agents in real-world environments isn't new, the practical limitations of physical hardware and cost have hindered its widespread adoption. V-IRL circumvents these barriers by providing a virtual playground where agents can roam freely, interact with diverse environments, and tackle practical tasks with sensory-rich perceptual data.

But V-IRL is more than just a sandbox for AI experimentation. It serves as a vast testbed for advancing open-world computer vision and embodied AI, offering structured access to a plethora of street view imagery and geospatial data spanning the globe. This expansive dataset enables researchers to measure progress in AI development on an unprecedented scale and diversity.

One of the most significant advantages of V-IRL is its ability to ground AI agents in real-world perception, a crucial aspect often overlooked in text-driven AI systems. By integrating street view imagery and geographic mapping APIs, V-IRL empowers agents to tackle perception-driven tasks, opening new frontiers in AI research and application.

Comments

None